DevBlog: Camera Control

Camera

I’ve been working on some usability issues for Edict over the last couple of weeks. Perhaps the most prominent is the camera control system.

Free-look

Up to this point we’ve had a free-look camera with no constraints on orientation or position. It’s a reasonable choice if you’re only concerned about development because it’s straightforward to implement, and it lets you view arbitrary parts of the scene. Want to check that backface culling is working on your terrain? Just fly underground. Want to view the sun position? Just pan skyward.

However, this lets the user move to locations that should be inaccessible for gameplay or performance reasons (eg, underground, or viewing the entire map); orientation changes tend to accumulate a lot of unintended camera roll; and reframing the view is unnecessarily finicky for players.

Overhead

I’ve always intended to lock the player’s view into something like the overhead style that’s common to many strategy games. Perhaps not directly above the terrain with a pure orthographic projection like in "RimWorld"[1], but closer to the isometric view of games like "Age of Empires".

While I do want to reduce some freedoms (like roll and pitch) it’s worthwhile retaining enough camera parameters to display some amount of visual depth in the world.

I’ve implemented a more complex variant of a system from a previous engine I developed. It takes some cues from physical camera systems that I’ve used over the years.

Parameters

We define the camera in terms of two parameters, fov and slope, which are applied at a given height.

| Parameter | Description |
|---|---|
| height | The elevation at which these parameters apply. |
| fov | The vertical field of view angle. |
| slope | The vertical angle of the camera, ie, how much it tilts down. |

The FoV parameter aims to emulate the feel of a zoom lens on a camera. Longer focal lengths feel flatter and more compressed, while shorter focal lengths feel more expansive and draw you into the scene as a participant.

The slope parameter angles the camera very close to straight down at maximum height so that the view looks closer to a map, and so that terrain and other world objects obstruct less of it. When the camera is zoomed in we tilt it a little closer to horizontal so that the player feels more like they’re viewing a scene in the world rather than planning movements on a map.

These parameters are specified for the minimum and maximum permitted camera heights and linearly interpolated.[2]

The diagram above shows a camera viewing a target \(T\) at distance \(D\), across heights \(H_0 \dots H_1\), with slopes \(S_0 \dots S_1\).
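The interpolation described above can be sketched in a few lines. This is only an illustration, not the engine's code: the `CameraParams` type and `interpolate` function are hypothetical names, the numeric values are placeholders, and clamping the height to the permitted range is an assumption.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class CameraParams:
    height: float  # elevation at which these values apply
    fov: float     # vertical field of view, in degrees
    slope: float   # tilt in degrees; negative values look down


def interpolate(lo: CameraParams, hi: CameraParams, height: float) -> CameraParams:
    """Linearly blend fov and slope between the min and max height entries."""
    # Assumption: heights outside the permitted range are clamped.
    height = max(lo.height, min(hi.height, height))
    t = (height - lo.height) / (hi.height - lo.height)
    return CameraParams(
        height=height,
        fov=lo.fov + t * (hi.fov - lo.fov),
        slope=lo.slope + t * (hi.slope - lo.slope),
    )


# Placeholder values for the two ends of the zoom range.
LO = CameraParams(height=5.0, fov=60.0, slope=-35.0)
HI = CameraParams(height=200.0, fov=45.0, slope=-80.0)
mid = interpolate(LO, HI, 102.5)  # halfway between the two heights
```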

Re-centering

Testing the system as described shows that it’s functional, but it quickly becomes frustrating: the camera does not remain pointed at the same world position after we update any camera parameters.

If we simply update the camera parameters then the camera will almost certainly be pointed at a different region of the world. Even a simple height increase will result in the camera target moving further away.

The player has pointed it at some area of interest and we should not disrupt this without a good reason.

I found it valuable to reframe the problem away from the camera position, and focus more on the target position. The problem then becomes finding a camera world-position such that, when the camera parameters are updated, the camera looks directly at the original target world-position.

This tends to result in the camera following an arc as the user zooms out (noted in the camera parameters diagram). That is, to increase the height of the camera and look at the same target we may need to move the camera slightly away from the target position.
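One way to solve for the camera position is plain trigonometry, sketched below. Several things here are assumptions rather than details from the engine: z is up, the height is measured above the target's elevation, slope is negative when looking down, and the `heading_deg` parameter and `camera_position` function are hypothetical.

```python
import math


def camera_position(target, height, slope_deg, heading_deg=0.0):
    """Return the (x, y, z) camera position that keeps `target` centred.

    Assumes z is up, `height` is measured above the target's elevation, and
    `slope_deg` is negative when the camera tilts down from horizontal.
    """
    tilt = math.radians(-slope_deg)  # angle below horizontal
    if not 0.0 < tilt <= math.pi / 2:
        raise ValueError("slope must tilt the camera downwards")
    # Horizontal distance the camera sits back from the point it looks at.
    setback = height / math.tan(tilt)
    heading = math.radians(heading_deg)
    tx, ty, tz = target
    return (
        tx - setback * math.cos(heading),
        ty - setback * math.sin(heading),
        tz + height,
    )


target = (10.0, 20.0, 0.0)
near = camera_position(target, height=5.0, slope_deg=-35.0)
far = camera_position(target, height=200.0, slope_deg=-80.0)
```

With these placeholder values the higher camera sits further back from the target than the lower one, which matches the arc described above.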

Using the model

The player primarily uses the scroll wheel on their mouse as a "zoom" control. This directly controls the height of the camera.

When the height is adjusted we linearly interpolate the fov and slope parameters for the new height, solve for the camera world position, and copy the values into our camera object.

Movement speed

When I was attempting to capture some animation footage it was immediately obvious that I’d forgotten to scale the panning speed of the camera. I found myself either slowly crawling around the terrain when zoomed out, or whizzing by a zombie/human fight when zoomed in.

The solution I’ve found useful here was to specify the panning speed as a fraction of the visible world. We know the view target, and we know the FoV angle, so we can calculate the extent of the visible terrain. [3] So if you want to spend 3 seconds panning across the visible scene then we divide that width by 3 for the panning speed.
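A rough sketch of that calculation follows. It approximates the visible width as the width of the view frustum at the target's distance, which ignores the trapezoidal footprint a tilted camera actually sees on the ground. The function names and the `aspect` parameter are hypothetical.

```python
import math


def visible_width(distance, fov_deg, aspect):
    """Approximate width of the view at `distance` along the view axis.

    `fov_deg` is the vertical field of view; `aspect` is width / height.
    """
    half_height = distance * math.tan(math.radians(fov_deg) / 2.0)
    return 2.0 * half_height * aspect


def pan_speed(distance, fov_deg, aspect, seconds_per_screen):
    """World units per second needed to cross the view in the given time."""
    return visible_width(distance, fov_deg, aspect) / seconds_per_screen


# Cross the visible scene in 3 seconds, using placeholder camera values.
speed = pan_speed(distance=50.0, fov_deg=45.0, aspect=16.0 / 9.0,
                  seconds_per_screen=3.0)
```

Because the width scales with the distance to the target, the panning speed rises as the camera climbs and falls as it descends.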

Configuration

There’s not really a great deal of configuration we want to directly expose to the player. Some parameters will be dictated by performance constraints, others by our gameplay intentions.

However, if you happen to know where to look you’ll see "yet-another-JSON file" that looks like the following.

    {
        "max" : {
            "fov" : 45,
            "height" : 200,
            "slope" : -80
        },
        "min" : {
            "fov" : 60,
            "height" : 5,
            "slope" : -35
        }
    }

Height vs FoV

There are interesting second-order effects that appear when the FoV parameter approaches zero.

My formulation of the camera system uses camera height as a proxy for camera zoom. That is: when you move the camera further away from the ground then objects tend to appear smaller; move the camera closer to the ground and objects appear larger.

However, you can achieve a similar outcome by decreasing the FoV of the camera. If the camera observes a smaller fraction of the scene, but the screen it’s presented on remains the same size, then objects will look larger.

This is what happens when we approach the maximum camera height in our system.

If we set an FoV range of 30..60 degrees then we start to see the scene scaling down as we initially increase the camera height, but then scaling back up as FoV effects start to dominate.
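The effect can be reproduced numerically by tracking how much of the world is visible at the target as the camera rises. The sketch below assumes a flat ground plane, heights of 5 to 200, and slopes of -35 to -80 degrees alongside the 30..60 degree FoV range; the function names are hypothetical.

```python
import math


def lerp(a, b, t):
    return a + t * (b - a)


def visible_extent(height, h_min=5.0, h_max=200.0,
                   fov_range=(60.0, 30.0), slope_range=(-35.0, -80.0)):
    """Vertical extent of the view at the target for a given camera height."""
    t = (height - h_min) / (h_max - h_min)
    fov = math.radians(lerp(*fov_range, t))
    tilt = math.radians(-lerp(*slope_range, t))  # angle below horizontal
    distance = height / math.sin(tilt)  # camera-to-target distance
    return 2.0 * distance * math.tan(fov / 2.0)


heights = [5.0 + i * 5.0 for i in range(40)]  # 5, 10, ..., 200
extents = [visible_extent(h) for h in heights]
# Height at which the most terrain is visible, ie, objects look smallest.
peak = heights[extents.index(max(extents))]
```

Under these assumptions the visible extent peaks below the maximum height and then shrinks again, so objects start to grow on screen over the last part of the zoom-out.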

This should be something we can solve by some straightforward adjustments to the camera model. But at this point we will largely ignore the issue as the parameter values that feel best in Edict are outside the problematic range.

TODO

There’s still some work to be done before we hand out some binaries for pre-alpha testing; most important is probably sanding off the rougher edges from the initial GUI prototype. But it’s getting fairly close now. I’ll attend to some of these tasks over the coming week.

While largely uninteresting from a user perspective, I’ve also spent parts of the last week or two integrating the new entity-component system into the engine. It should (eventually) lead to a modest performance gain, but perhaps more importantly it will simplify the implementation of systems like visualising building placement.

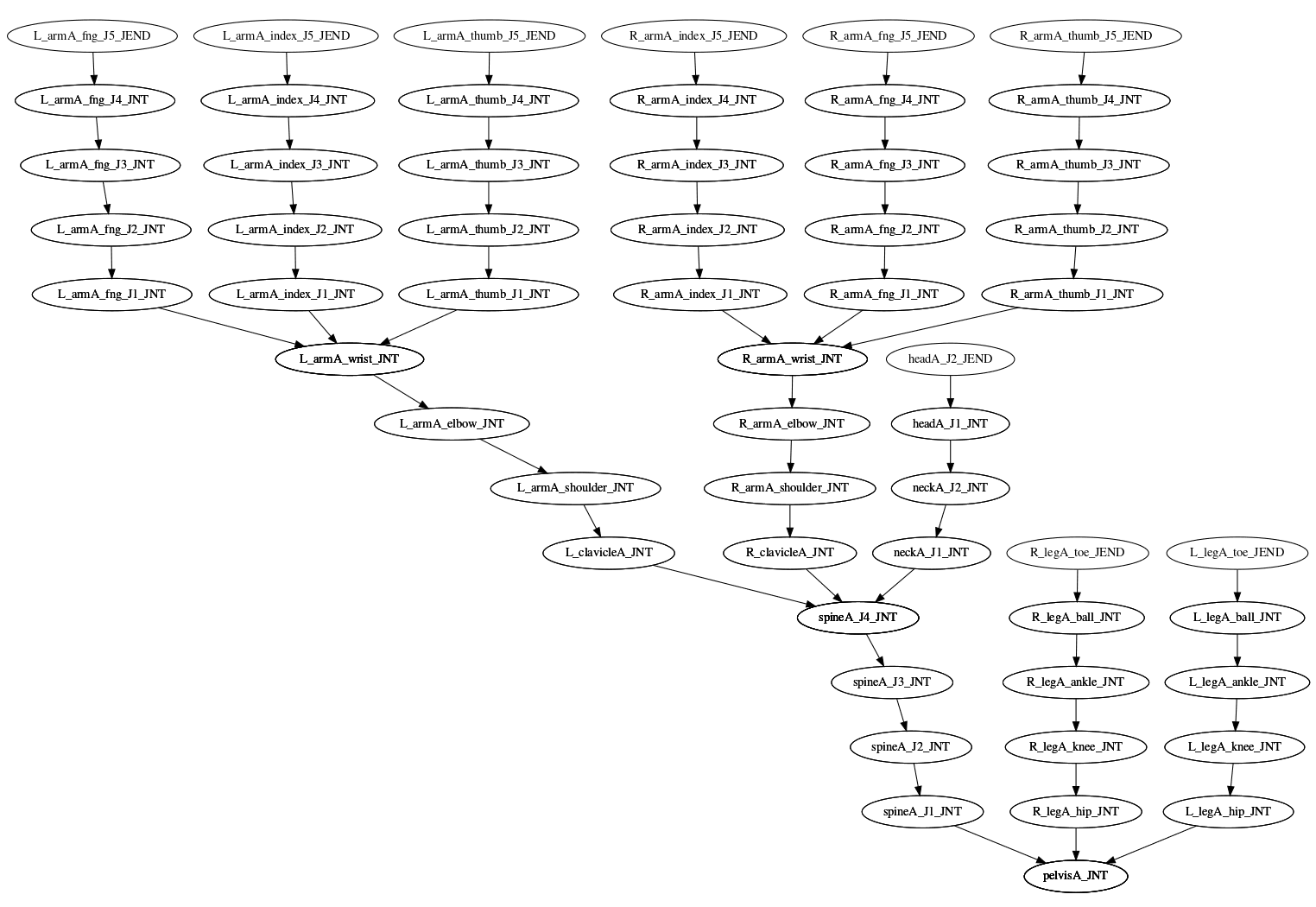

This work seems to have largely stabilised now and I should have some form of write-up on it next week.